Hi, all Common Lispers.

It's been 7 years since I talked about Woo at the European Lisp Symposium. I have heard that several people are using Woo to run their web services, and I am grateful for that.

I have since left the company where I was working when I developed Woo. Today, I'm at another company, still writing Common Lisp, this time for a payment service. Not surprisingly, I'm using Woo there as well, and so far I have had no performance problems or other operational difficulties.

But on the other hand, some people are still reckless enough to try to run web services using Hunchentoot. And, some people complain about the lack of articles about Woo.

I apologize for my negligence in not keeping the information up to date and not publishing articles. So it may still be worthwhile to write about Woo today.

What is Woo?

Woo is a high-performance web server written in Common Lisp.

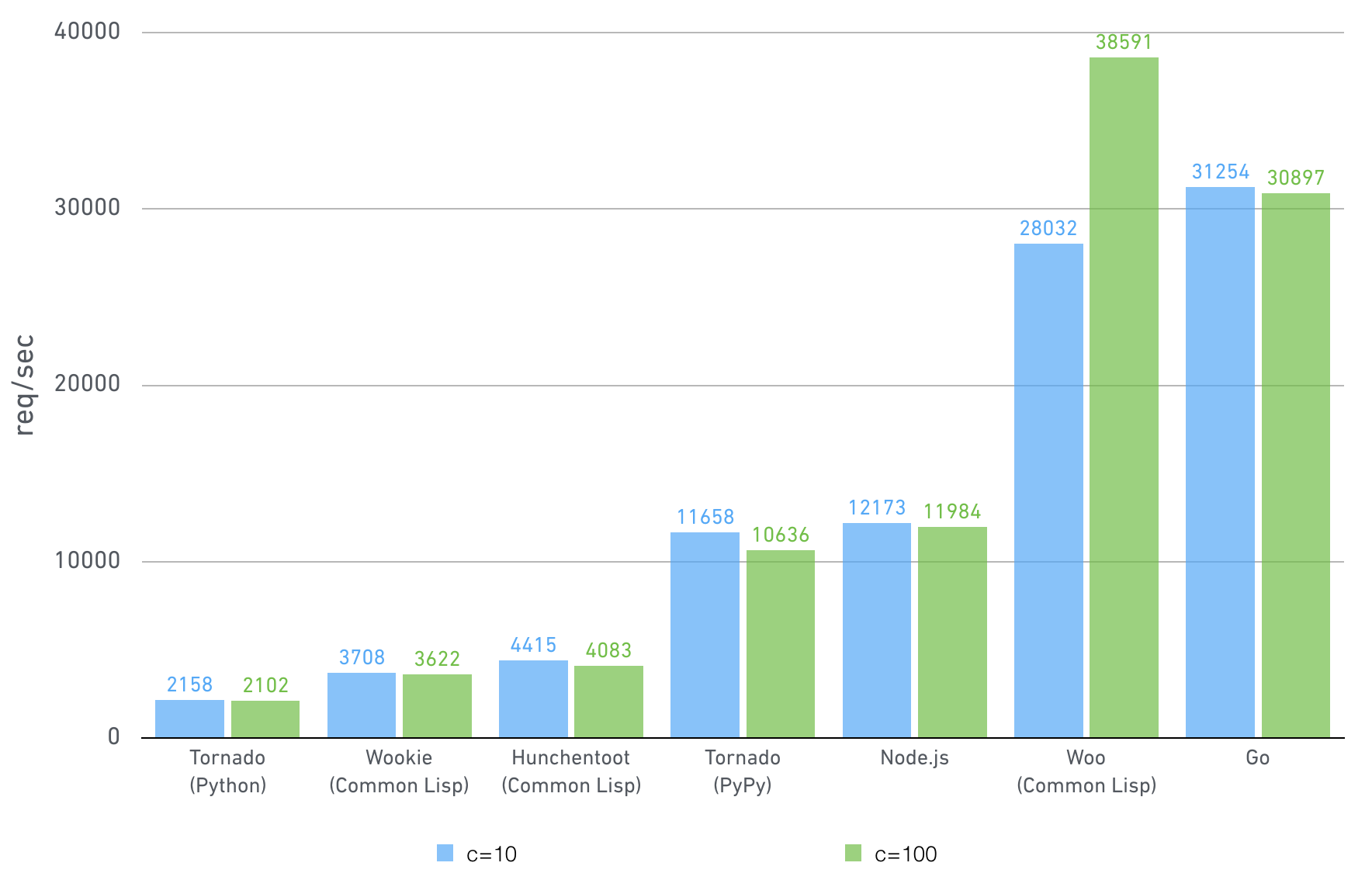

Its performance is nearly on par with Go's web server and several times better than other Common Lisp servers, like Hunchentoot.

What's different? Woo not only eliminates bottlenecks by tuning the Common Lisp code; its architecture is also designed to handle many concurrent requests efficiently.

Compared to Hunchentoot

Hunchentoot is the most popular Common Lisp web server. According to the Quicklisp download stats for April 2022, Hunchentoot is the only web server in the top 100 (for reference, Woo is 302nd).

An excellent point of Hunchentoot is that it's written in portable Common Lisp. It works on Linux, macOS, and Windows with many Lisp implementations. No external libraries are required.

On the other hand, there are concerns about using it as an HTTP server open to the world.

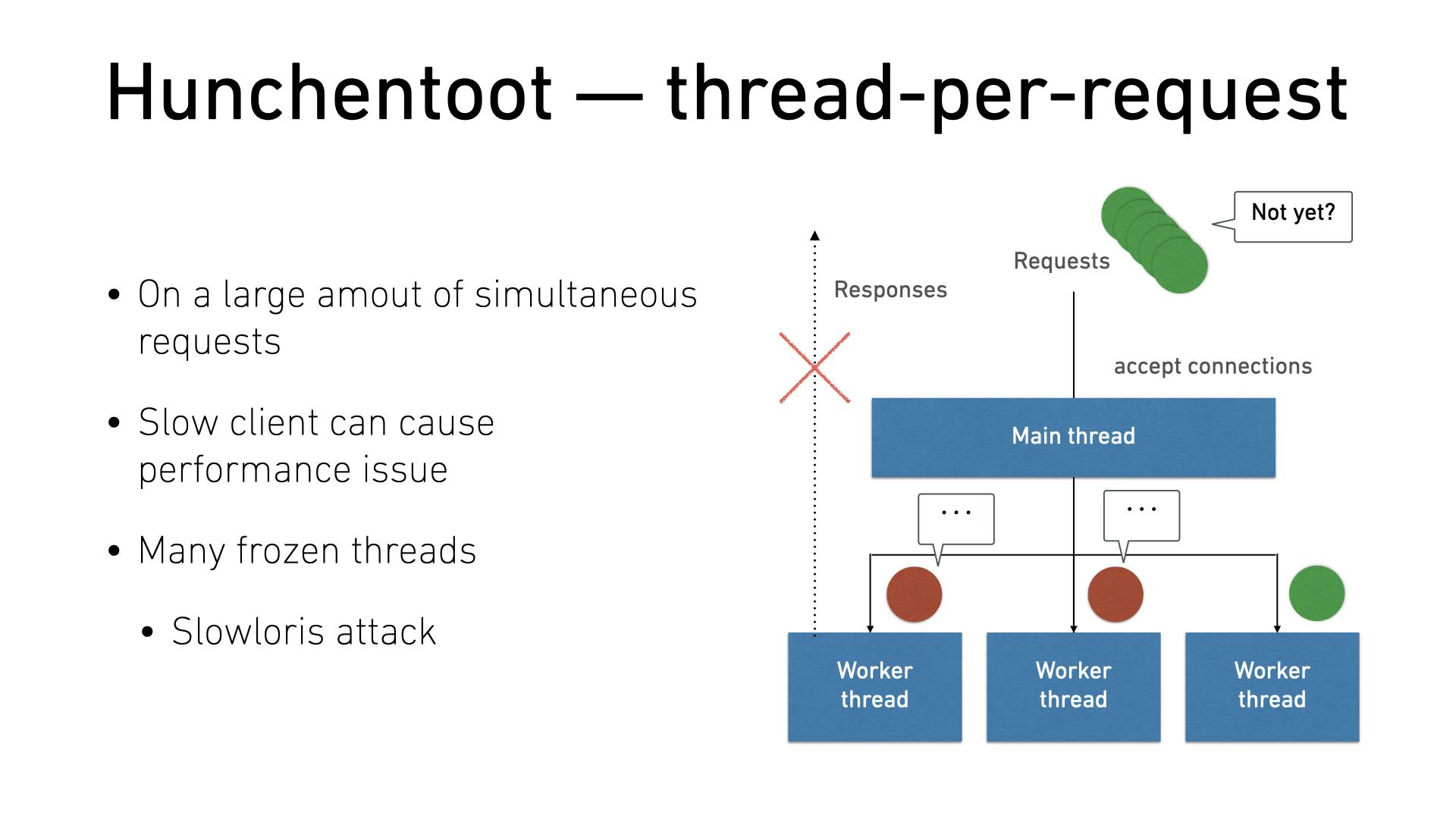

This is because Hunchentoot takes a thread-per-request approach to handling requests.

The disadvantage of this architecture is that it is not good at handling large numbers of simultaneous requests.

Hunchentoot creates a thread when it accepts a new connection, sends the response from that thread, and terminates the thread when the client disconnects. Therefore, the longer a slow client takes (e.g., because of network transmission time), the longer its thread stays alive and the more concurrent threads are required.

This wouldn't matter if every client were fast enough. In reality, however, some clients, such as smartphone users, are slow or unstable.
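To get a feel for how quickly slow clients inflate the thread count, here is a rough back-of-the-envelope sketch. It applies Little's law (average concurrency = arrival rate × time each connection stays open). The function name and the numbers are hypothetical and chosen only for illustration; they are not measurements of Hunchentoot.

```lisp
;; Hypothetical helper: estimates how many threads are alive at once
;; in a thread-per-connection server, using Little's law.
(defun threads-needed (requests-per-second seconds-per-connection)
  "Average number of simultaneously live threads, rounded up."
  (ceiling (* requests-per-second seconds-per-connection)))

;; Fast clients: 100 requests/s, each connection open for 50 ms.
(threads-needed 100 1/20) ; => 5

;; Slow clients: the same 100 requests/s, but each connection open for 3 s.
(threads-needed 100 3)    ; => 300
```

The request rate is identical in both calls. Only the time each client keeps its connection open changes, and that alone multiplies the number of threads by 60.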

There is also a DoS attack called Slowloris, which intentionally opens large numbers of slow simultaneous connections. Web services running on Hunchentoot can be made inaccessible almost instantly by this attack. The bad news is that scripts for launching a Slowloris attack are easy to find on the web.

Compared to Wookie

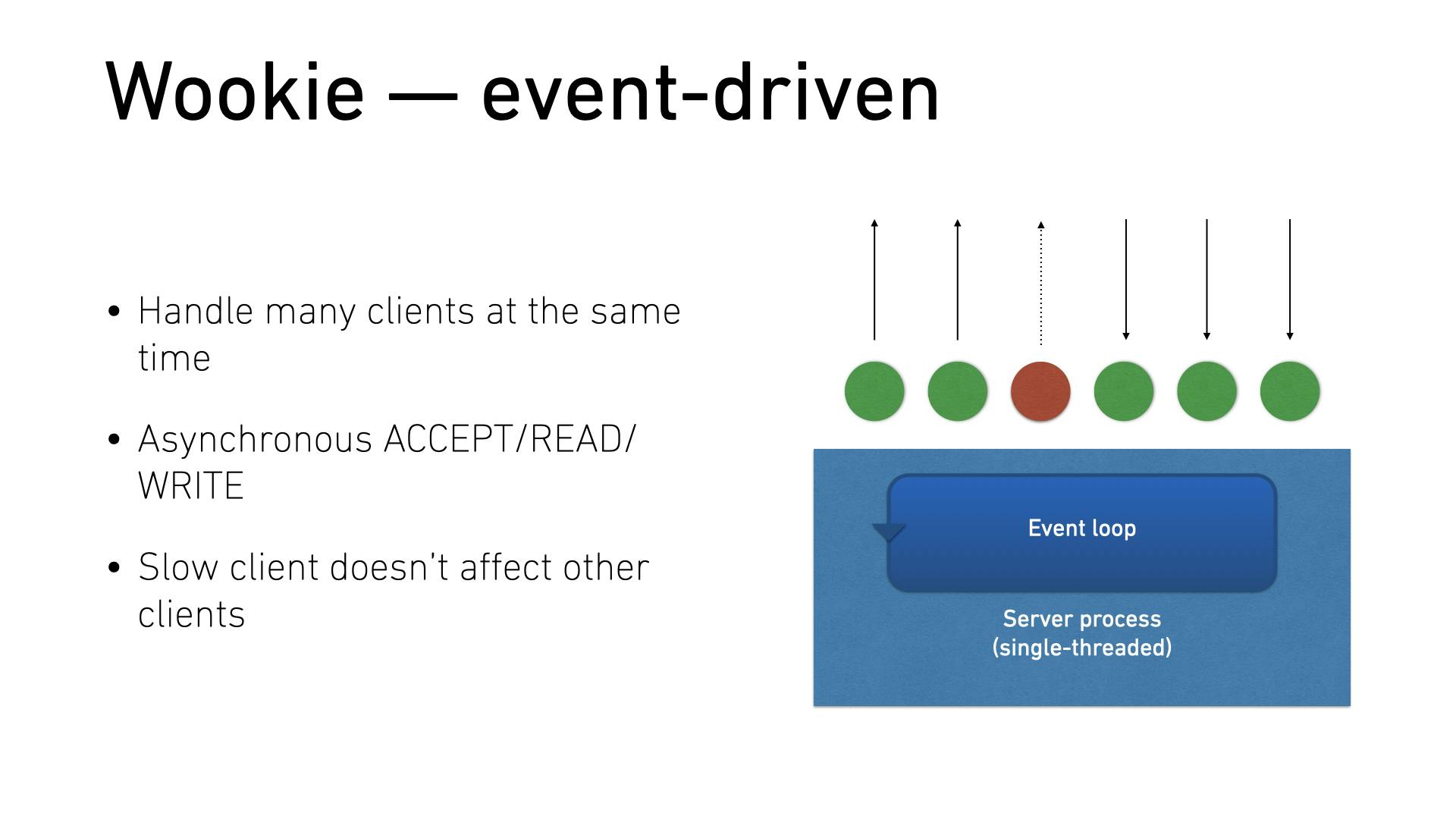

The event-driven model is another approach to handling a massive number of simultaneous connections. Let's take Wookie as an example.

In this model, all connection I/O is processed asynchronously, so the speed and stability of the connection do not affect other connections.

Of course, this architecture also has its drawbacks. It works in a single thread, which means only one task can be executed at a time. While a response is being sent to one client, it is not possible to read another client's request.

In my benchmark, Wookie's throughput is slightly worse than Hunchentoot's for simple HTTP request and response iterations.

On the other hand, it is better suited to protocols such as WebSocket, in which small chunks are exchanged asynchronously.

Wookie depends on libuv, a C library that provides asynchronous I/O. Although installing an external library is bothersome, libuv is used internally by Node.js, so it is not difficult to install in most environments, including Windows.

Another web server, house, is also event-driven but written in portable Common Lisp. Its event loop is implemented with cl:loop and usocket:wait-for-input. If performance doesn't matter, it is another option.
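To illustrate what an event loop built from cl:loop and usocket:wait-for-input can look like, here is a minimal sketch of a single-threaded line-echo server. It is not house's actual code. The function names, the echo protocol, and port 5001 are my own assumptions for illustration, and it assumes Quicklisp is available to load usocket.

```lisp
;; A sketch only: a single-threaded echo loop, not an HTTP server.
(ql:quickload :usocket)

(defun handle-ready-sockets (listener sockets &key (timeout 1))
  "Run one loop iteration and return the updated list of watched sockets."
  ;; wait-for-input blocks until a socket is readable or TIMEOUT elapses.
  (dolist (ready (usocket:wait-for-input sockets :timeout timeout :ready-only t)
                 sockets)
    (if (eq ready listener)
        ;; The listener is readable: a new client is waiting to be accepted.
        (push (usocket:socket-accept listener) sockets)
        ;; A client is readable: echo one line back, or drop it at EOF.
        ;; Simplification: read-line blocks if the client sent a partial line.
        (let* ((stream (usocket:socket-stream ready))
               (line (read-line stream nil nil)))
          (cond (line
                 (write-line line stream)
                 (force-output stream))
                (t
                 (setf sockets (remove ready sockets))
                 (usocket:socket-close ready)))))))

(defun run-echo-server (&key (host "127.0.0.1") (port 5001))
  "Serve forever in the current thread. HOST and PORT are arbitrary defaults."
  (let* ((listener (usocket:socket-listen host port :reuse-address t))
         (sockets (list listener)))
    (unwind-protect
         (loop (setf sockets (handle-ready-sockets listener sockets)))
      (mapc #'usocket:socket-close sockets))))
```

Every connection is served by the same thread, so no thread is created per client. The blocking read-line also shows the drawback mentioned above: while one client is being handled, all the others wait.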

Multithreaded Event-driven

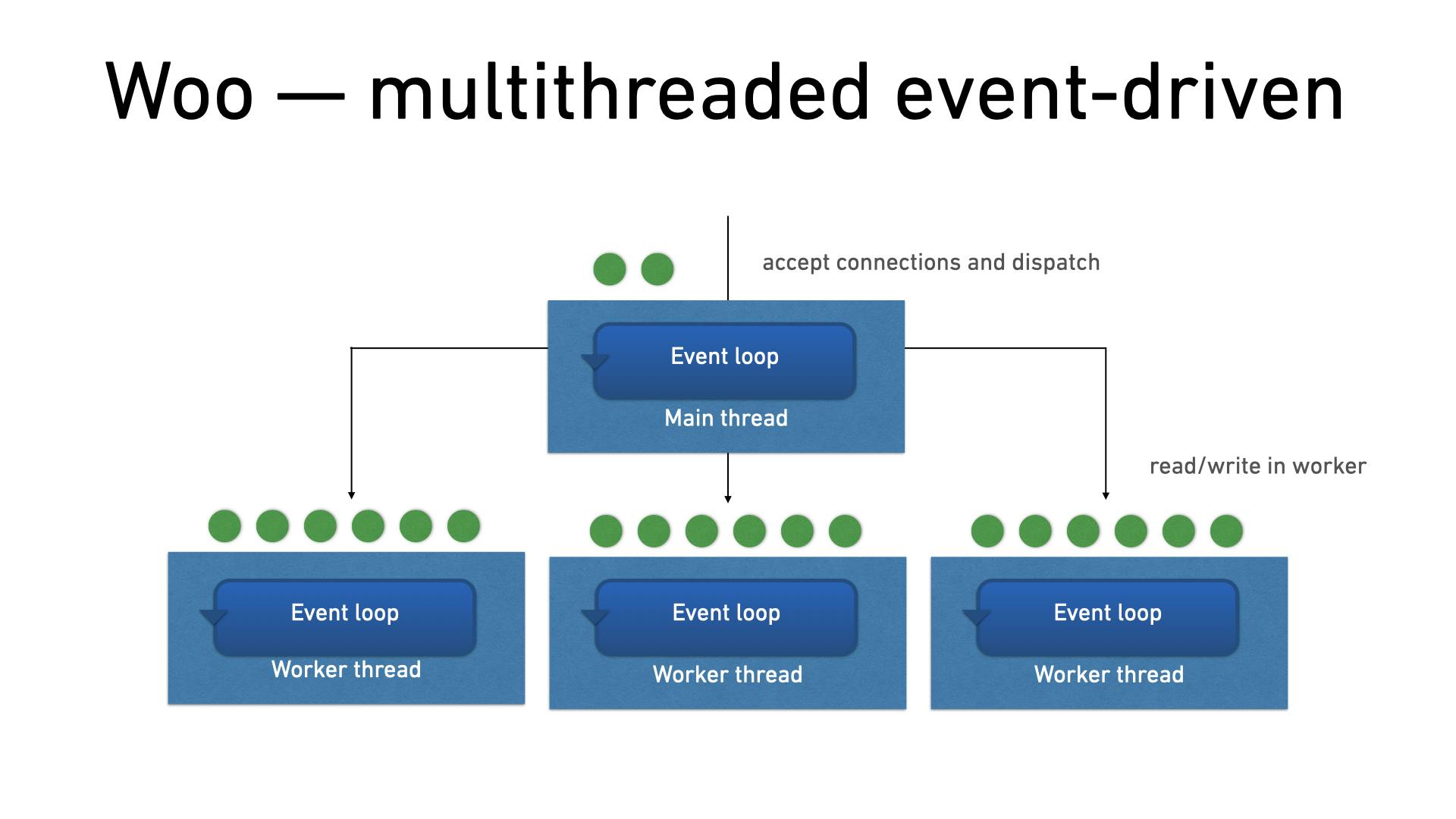

Woo also adopts the event-driven model, except it has multiple event loops.

First, it accepts a connection in the main thread and dispatches it to one of the pre-created worker threads. Each worker then processes requests and responses with asynchronous I/O.

That is why its throughput is exceptionally high: it processes multiple requests simultaneously in worker threads.

In addition, Woo uses libev while Wookie uses libuv. libev runs fast since it is a pretty small library that only wraps each OS's async I/O API, such as epoll, kqueue, and poll. Its downside is narrower platform support. In particular, it doesn't support Windows. However, I don't think that will be a problem in most cases since it's rare to use Windows for a web server.
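As a rough sketch of how the worker threads are requested in practice, the snippet below starts Woo with several workers through the :worker-num keyword of woo:run. It assumes Quicklisp and the libev shared library are installed. The wrapper function start-server, the port, and the worker count of 4 are arbitrary choices for illustration.

```lisp
;; Assumes Quicklisp is set up and libev is installed on the system.
(ql:quickload :woo)

;; A trivial application: every request gets the same plain-text response.
(defparameter *app*
  (lambda (env)
    (declare (ignore env))
    '(200 (:content-type "text/plain") ("Hello from Woo"))))

(defun start-server (&key (port 5000) (worker-num 4))
  "Hypothetical wrapper: run *APP* on Woo with WORKER-NUM worker threads.
This call blocks until the server is stopped."
  (woo:run *app* :port port :worker-num worker-num))
```

Calling (start-server) from the REPL blocks the current thread. A sensible worker count depends on the machine, so treat 4 as a placeholder rather than a recommendation.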

Woo is Clack-compliant

One feature of Woo I'd like to mention is that it is Clack-compliant.

Clack is an abstraction layer for Common Lisp web servers. It allows a web application to run, without any changes, on different web servers that follow the Clack standard.

Since Woo supports Clack natively, it can run Lack applications without any additional libraries. I will explain what Clack and Lack are in another article.
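Here is a minimal sketch of what switching servers through Clack can look like: the application stays the same and only the :server argument of clack:clackup changes. The helper functions and the APP_ENV environment variable are hypothetical names I made up for this example.

```lisp
;; Assumes Quicklisp is set up. Clack loads the chosen server backend on demand.
(ql:quickload :clack)

(defun server-for (environment)
  "Hypothetical policy: Woo in production, Hunchentoot everywhere else."
  (if (equal environment "production")
      :woo
      :hunchentoot))

(defun start-app (app &key (environment (uiop:getenv "APP_ENV")) (port 5000))
  "Start APP on the server chosen for ENVIRONMENT and return Clack's handler.
APP_ENV is an assumed variable name, not something Clack itself reads."
  (clack:clackup app :server (server-for environment) :port port))
```

The value returned by start-app can be passed to clack:stop to shut the server down. The application function itself never needs to know which server is underneath.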

Running inside Docker

Lastly, let's run Woo inside a Docker container.

Files

These three files are necessary:

- Dockerfile
- entrypoint.sh
- app.lisp

Dockerfile

```dockerfile
FROM fukamachi/sbcl
ENV PORT 5000

RUN set -x; \
  apt-get update && apt-get -y install --no-install-recommends \
    libev-dev \
    gcc \
    libc6-dev && \
  rm -rf /var/lib/apt/lists/*

WORKDIR /app
COPY entrypoint.sh /srv

RUN set -x; \
  ros install clack woo

ENTRYPOINT ["/srv/entrypoint.sh"]
CMD ["app.lisp"]
```

entrypoint.sh

A script to start a web server, Woo, in this case.

```bash
#!/bin/bash

exec clackup --server woo --debug nil --address "0.0.0.0" --port "$PORT" "$@"
```

app.lisp

A Lack application is written in this file. See Lack’s documentation for details.

```lisp
(lambda (env)
  (declare (ignore env))
  '(200 () ("Hello from Woo")))
```
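The application above ignores its argument. As a slightly larger sketch, the variant below reads the :path-info key from the env property list to return different responses per path. The helper respond-text and the /health path are hypothetical additions for illustration, and I am assuming, as with the original app.lisp, that the file's final form evaluates to the application.

```lisp
(defun respond-text (status text)
  "Hypothetical helper: build a Lack response (status, headers, body)."
  (list status '(:content-type "text/plain") (list text)))

(defparameter *app*
  (lambda (env)
    ;; ENV is a property list describing the request.
    (let ((path (getf env :path-info)))
      (cond ((equal path "/") (respond-text 200 "Hello from Woo"))
            ((equal path "/health") (respond-text 200 "ok"))
            (t (respond-text 404 "Not Found"))))))

;; Final form: evaluates to the application function.
*app*
```

Because the application is just a function from a property list to a list, it can be called directly in the REPL, for example with (funcall *app* '(:path-info "/health")), without starting any server.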

Build

```bash
$ docker build -t server-app-test .
```

Run

```bash
$ docker run --rm -it -p 5000:5000 -v $PWD:/app server-app-test
```

Then, open localhost:5000 with your browser.

Summary

I showed how Woo is different from other web servers and how to run it with Docker.

Clack allows switching web servers easily without modifying the application code. In the case of Quickdocs.org, I use Hunchentoot for development and Woo for production.

I will introduce Clack/Lack in the next article.